Overfitting is a huge problem, especially in deep neural networks. There are quite a few ways to figure out that your neural network is overfitting your data: maybe you have a high variance problem, or you draw a train and test accuracy plot and see that the model does much better on the training data than on the test data. One of the first things you should try out in this case is regularization.

All the LaTeX code used in this blog is compiled in the GitHub repo for this blog: Rishit-dagli/Solving-overfitting-in-Neural-Nets-with-Regularization (github.com)

Why Regularization?

The other way to address high variance is to get more training data, which is quite reliable. With more training data, you can think of it as trying to generalize your weights for all situations. That solves the problem most of the time, so why do anything else? The huge downside is that you cannot always get more training data: it could be expensive to collect, and sometimes it is simply not accessible.

So it makes sense for us to discuss some methods which help reduce overfitting. Adding regularization will often help to prevent overfitting. And there is a hidden benefit: regularization often also helps you minimize random errors in your network. Having discussed why the idea of regularization makes sense, let us now understand it.

Understanding L₂ Regularization

We will start by developing these ideas for logistic regression. Recall that you are trying to minimize a function J, called the cost function, which looks like this:
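Written out, assuming the usual logistic regression notation (m training examples, prediction ŷ⁽ⁱ⁾ and label y⁽ⁱ⁾ for example i, and the loss L typeset as a calligraphic letter), the cost function would be:

```latex
J(w, b) = \frac{1}{m} \sum_{i=1}^{m} \mathcal{L}\left(\hat{y}^{(i)}, y^{(i)}\right)
```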

Here w is a parameter vector of size nₓ (the number of input features), b is a real number, and L is the loss function. Just a quick refresher, nothing new.

So, to add regularization, we will add a term to this cost function equation (we will see more of this term shortly):
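With the L₂ term added, the cost function would read as follows. The λ/2m scaling is an assumption here, chosen because it matches the 1 - αλ/m factor that shows up in the weight decay discussion later in this blog:

```latex
J(w, b) = \frac{1}{m} \sum_{i=1}^{m} \mathcal{L}\left(\hat{y}^{(i)}, y^{(i)}\right) + \frac{\lambda}{2m} \lVert w \rVert_2^2
```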

Here λ is another hyperparameter that you might have to tune, and it is called the regularization parameter.

By convention, ||w||²₂ just means taking the Euclidean (L₂) norm of w and squaring it. Let us summarise this in an equation so it becomes easy for us, simplifying the L₂ norm term a bit:
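For a parameter vector w with nₓ entries (nₓ is assumed notation for the number of input features), the squared L₂ norm expands to:

```latex
\lVert w \rVert_2^2 = \sum_{j=1}^{n_x} w_j^2 = w^{T} w
```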

I have just expressed it in terms of the squared Euclidean norm of the parameter vector w. The term we just talked about is called L₂ regularization. Don’t worry, we will discuss more about the λ term in a while, but at least you now have a rough idea of how it works. There is a reason this method is called “L₂ regularization”: we are computing the L₂ norm of w.

Until now, we discussed regularization of the parameter w, and you might have asked yourself: why only w? Why not add a term for b? That is a logical question. It turns out that in practice you could add a term for b as well, but we usually just omit it. If you look at the parameters, you will notice that w is usually a pretty high-dimensional vector, especially when you have a high variance problem. Think of it as w having a lot of individual parameters, so you aren’t fitting all of them well, whereas b is just a single number. Almost all of your parameters are in w rather than in b, so even if you add that last b term to your equation, in practice it would not make a great difference.

L₁ Regularization

We just discussed L₂ regularization, and you might also have heard of L₁ regularization. L₁ regularization is when, instead of the term we were talking about earlier, you add the L₁ norm of the parameter vector w. Let’s see this term in a mathematical way:
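A sketch of the L₁ term, assuming the same λ/2m scaling as the L₂ term (some texts use λ/m instead, and the choice only rescales λ):

```latex
\frac{\lambda}{2m} \lVert w \rVert_1 = \frac{\lambda}{2m} \sum_{j=1}^{n_x} \lvert w_j \rvert
```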

If you use L₁ regularization, your w might end up being sparse, which means that your w vector will have a lot of zeros in it. It is often said that this can help with compressing the model: because a set of the parameters is zero, you need less memory to store the model. I feel that, in practice, using L₁ regularization to make your model sparse helps only a little bit. So I would not recommend it, at least not for model compression. And when you train your networks, L₂ regularization is just used much, much more often.
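To make the two penalties concrete, here is a minimal NumPy sketch. The function names `l1_penalty` and `l2_penalty`, the example vector, and the λ/2m scaling for both terms are assumptions made for illustration, not something fixed by this blog.

```python
import numpy as np

def l2_penalty(w, lam, m):
    """L2 term: (lam / 2m) times the sum of squared entries of w."""
    return (lam / (2 * m)) * np.sum(np.square(w))

def l1_penalty(w, lam, m):
    """L1 term: (lam / 2m) times the sum of absolute entries of w (assumed scaling)."""
    return (lam / (2 * m)) * np.sum(np.abs(w))

# Hypothetical parameter vector with one exact zero in it
w = np.array([0.5, -1.0, 0.0, 2.0])

print("L2 penalty:", l2_penalty(w, lam=0.1, m=10))
print("L1 penalty:", l1_penalty(w, lam=0.1, m=10))
```

Note how the L₂ penalty is dominated by the largest entry (2.0 contributes 4 of the 5.25 in the sum of squares), while the L₁ penalty grows only linearly with each entry.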

Extending the idea to Neural Nets

We just saw how we would do regularization for logistic regression, and you now have a clear idea of what regularization means and how it works. So now it would be good to see how these ideas extend to neural nets. Recall our cost function for a neural net, which looks something like this:
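In the usual notation, assuming a network with L layers (not to be confused with the loss, typeset here as a calligraphic letter), the cost is a function of every layer's weights and biases:

```latex
J\left(w^{[1]}, b^{[1]}, \ldots, w^{[L]}, b^{[L]}\right) = \frac{1}{m} \sum_{i=1}^{m} \mathcal{L}\left(\hat{y}^{(i)}, y^{(i)}\right)
```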

Now recall what we added earlier: the regularization parameter λ, a scaling factor and, most importantly, the L₂ norm. We will do something similar here, except that we sum the norm over the layers. So, the term we add would look like this:
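A sketch of that added term, assuming L denotes the number of layers and the same λ/2m scaling as in the logistic regression case:

```latex
\frac{\lambda}{2m} \sum_{l=1}^{L} \left\lVert w^{[l]} \right\rVert^2
```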

Let us now simplify this norm term. It is defined as the sum over i and the sum over j of each of the elements of that matrix, squared:
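In symbols, with n⁽ˡ⁾ written as n^{[l]} for the number of units in layer l, this can be laid out in two lines:

```latex
\begin{aligned}
\left\lVert w^{[l]} \right\rVert^2 &= \sum_{i=1}^{n^{[l]}} \sum_{j=1}^{n^{[l-1]}} \left( w_{ij}^{[l]} \right)^2 \\
w^{[l]} &\in \mathbb{R}^{n^{[l]} \times n^{[l-1]}}
\end{aligned}
```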

What the second line here tells you is that your weight matrix w has dimensions (nˡ, nˡ⁻¹), the number of units in layers l and l-1 respectively. This is called the “Frobenius norm” of a matrix. The Frobenius norm is highly useful and is used in quite a lot of applications, the most exciting of which is recommendation systems. Conventionally it is denoted by a subscript “F”. You might say it would be easier to just call it the L₂ norm, but by convention we call it the Frobenius norm, and it has a different notation:

||⋅||₂² - L₂ norm

||⋅||²_F - Frobenius norm
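As a quick check of this definition, the sketch below computes the squared Frobenius norm of a small weight matrix both by summing squared entries and with `np.linalg.norm`, then sums it over layers to get the regularization term. The helper name `l2_regularization_term`, the matrices and the values of λ and m are made up for illustration.

```python
import numpy as np

def l2_regularization_term(weights, lam, m):
    """(lam / 2m) times the sum of squared Frobenius norms over all layers."""
    return (lam / (2 * m)) * sum(np.sum(np.square(W)) for W in weights)

# Hypothetical two-layer network: W1 has shape (2, 3), W2 has shape (1, 2),
# that is (units in layer l, units in layer l-1)
W1 = np.array([[1.0, -2.0, 0.5],
               [0.0, 3.0, -1.0]])
W2 = np.array([[0.5, -0.5]])

# Sum of squared entries versus NumPy's built-in Frobenius norm, squared
fro_sq_manual = np.sum(np.square(W1))
fro_sq_numpy = np.linalg.norm(W1, ord="fro") ** 2

reg_term = l2_regularization_term([W1, W2], lam=0.7, m=100)
print(fro_sq_manual, fro_sq_numpy, reg_term)
```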

Implementing Gradient Descent

Earlier, what we would do is compute dw using backpropagation, that is, get the partial derivative of your cost function J with respect to w for any given layer l, and then update wˡ using the learning rate α. Now that we have the regularization term in our objective, we simply add a term for the regularization. These are the steps and equations for this:
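A sketch of the three steps, with the numbering assumed from the discussion that follows and the λ/m factor coming from differentiating a λ/2m penalty on the squared norm:

```latex
\begin{aligned}
\text{1.}\quad & dw^{[l]} = (\text{term from backpropagation}) + \frac{\lambda}{m} w^{[l]} \\
\text{2.}\quad & \frac{\partial J}{\partial w^{[l]}} = dw^{[l]} \\
\text{3.}\quad & w^{[l]} := w^{[l]} - \alpha \, dw^{[l]}
\end{aligned}
```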

The first step earlier used to be just the term received from backpropagation, and we have now added a regularization term to it. The other two steps are pretty much the same as you would do in any other neural net. This new dw[l] is still a correct definition of the derivative of your cost function with respect to your parameters, now that you have added the extra regularization term at the end. This update is also the reason L₂ regularization is sometimes called weight decay. Now, if you take the equation from step 1 and substitute it into the equation from step 3:
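Carrying out that substitution, under the same assumed λ/m gradient term, gives:

```latex
\begin{aligned}
w^{[l]} &:= w^{[l]} - \alpha \left[ (\text{term from backpropagation}) + \frac{\lambda}{m} w^{[l]} \right] \\
&= \left( 1 - \frac{\alpha \lambda}{m} \right) w^{[l]} - \alpha \, (\text{term from backpropagation})
\end{aligned}
```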

So, what this shows is that whatever your matrix w[l] is, you are going to make it a bit smaller. It is as if you are taking the matrix w and multiplying it by 1 - αλ/m.

So this is why L₂ norm regularization is also called weight decay. It is just like ordinary gradient descent, where you update w by subtracting α times the original gradient you got from backpropagation, but now you are also multiplying w by this factor, which is a little bit less than 1. I do not use this term often, but you now know the intuition behind its name.
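The equivalence between "add (λ/m)·w to the gradient" and "shrink w by 1 - αλ/m" can be checked numerically. This is a minimal sketch: `dw_backprop` is a random stand-in for whatever backpropagation would return, and the values of α, λ and m are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
w = rng.normal(size=(4, 3))            # weights of one hypothetical layer
dw_backprop = rng.normal(size=(4, 3))  # stand-in for the gradient from backprop
alpha, lam, m = 0.1, 0.7, 100

# Form 1: add the regularization gradient, then take an ordinary step
dw = dw_backprop + (lam / m) * w
w_regularized_step = w - alpha * dw

# Form 2: decay the weights first, then subtract the plain backprop step
decay = 1 - alpha * lam / m
w_weight_decay = decay * w - alpha * dw_backprop

print("decay factor:", decay)
print("same update:", np.allclose(w_regularized_step, w_weight_decay))
```

With these numbers the decay factor is just under 1, so each step pulls every weight slightly towards zero before the usual gradient update is applied.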

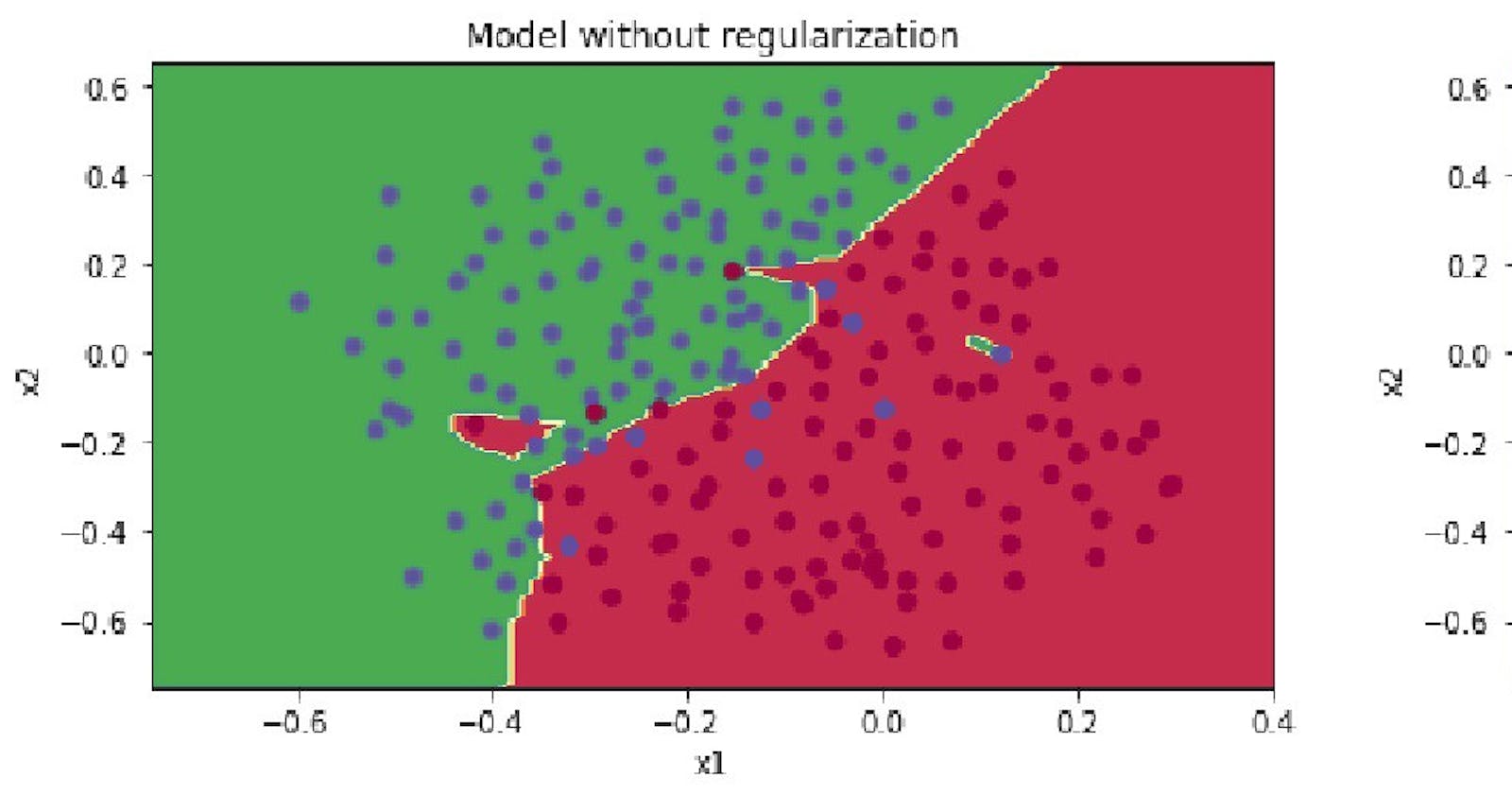

Why Regularization reduces overfitting

When implementing regularization we added a term, the Frobenius norm, which penalises the weight matrices for being too large. So the question to think about is: why does shrinking the Frobenius norm reduce overfitting?

Idea 1

One idea is that if you crank the regularisation parameter λ up to be really big, the optimization will be really incentivized to set the weight matrices w reasonably close to zero. So one piece of intuition is that it may set the weights so close to zero for a lot of hidden units that it basically zeroes out much of the impact of these hidden units. If that is the case, the neural network becomes a much smaller, simplified neural network. In fact, it is almost like a logistic regression unit, just stacked many layers deep. That would take you from the overfitting case much closer to the high bias case. But hopefully there is an intermediate value of λ that results in a fit that is just right. So, to sum up, you are zeroing out or reducing the impact of some hidden units and essentially getting a simpler network.

The intuition of completely zeroing out a bunch of hidden units isn’t quite right and does not hold up well in practice. What actually happens is that we still use all the hidden units, but each of them has a much smaller effect. You do end up with a simpler network, as if you had a smaller network, which is therefore less prone to overfitting.

Idea 2

Here is another intuition for why regularization reduces overfitting. To understand this idea, we take the example of the tanh activation function, so g(z) = tanh(z).

Notice that if z takes on only a small range of values, that is, |z| is close to zero, then you are just using the linear regime of the tanh function. Only if z is allowed to wander to larger or smaller values, so that |z| is farther from 0, does the activation function start to become less linear. So the intuition you might take away is that if λ, the regularization parameter, is large, then your parameters will be relatively small, because they are penalized for being large in the cost function.

Since z = wx + b, if the weights w tend to be very small, then z will also be relatively small. And in particular, if z ends up taking relatively small values, g(z) will be roughly linear. So it is as if every layer is roughly linear, like linear regression, which makes the whole thing behave like a linear network. And even a very deep network with a linear activation function is, in the end, only able to compute a linear function. This makes it impossible to fit some very complicated decision boundaries.

If you have a neural net that can fit some very complicated decision boundaries, it could possibly overfit, and this could definitely help to reduce your overfitting.
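Both halves of this argument can be checked with a few lines of NumPy. The sketch below, using made-up ranges and random matrices, first measures how far tanh(z) is from z for small and large |z|, and then shows that two stacked linear layers collapse into a single linear map.

```python
import numpy as np

# Part 1: tanh is nearly the identity near zero, but not for larger |z|
small_z = np.linspace(-0.1, 0.1, 5)
large_z = np.linspace(-3.0, 3.0, 5)
small_gap = np.max(np.abs(np.tanh(small_z) - small_z))
large_gap = np.max(np.abs(np.tanh(large_z) - large_z))
print("max |tanh(z) - z| for |z| <= 0.1:", small_gap)
print("max |tanh(z) - z| for |z| <= 3.0:", large_gap)

# Part 2: stacking linear layers (no activation, biases omitted for brevity)
# is the same as applying one combined matrix
rng = np.random.default_rng(0)
W1 = rng.normal(size=(4, 3))
W2 = rng.normal(size=(2, 4))
x = rng.normal(size=3)
stacked = W2 @ (W1 @ x)
collapsed = (W2 @ W1) @ x
print("stacked equals collapsed:", np.allclose(stacked, collapsed))
```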

A tip to remember

When you implement gradient descent, one of the steps to debug it is to plot the cost function J as a function of the number of iterations of gradient descent. You want to see that the cost function J decreases monotonically after every iteration. If you are implementing regularization, remember that J now has a new definition. If you plot the old definition of J, you might not see a monotonic decrease. So, to debug gradient descent, make sure that you are plotting the new definition of J that includes the second term as well. Otherwise, you might not see J decrease monotonically on every single iteration.

I have found regularization pretty helpful in my deep learning models, and it has helped me solve overfitting quite a few times. I hope it can help you too.
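Here is a minimal sketch of this tip for L₂-regularized logistic regression on synthetic data. The data, the helper names `sigmoid` and `costs`, and the values of α and λ are all assumptions for illustration. It records both definitions of J at every iteration so you can plot the regularized one.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def costs(w, b, X, y, lam):
    """Return (old J without the L2 term, new J with the L2 term)."""
    m = X.shape[0]
    y_hat = np.clip(sigmoid(X @ w + b), 1e-12, 1 - 1e-12)  # avoid log(0)
    data_cost = -np.mean(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))
    reg_cost = (lam / (2 * m)) * np.sum(w ** 2)
    return data_cost, data_cost + reg_cost

# Synthetic, made-up binary classification data
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = (X[:, 0] + 0.5 * X[:, 1] > 0).astype(float)

w, b = np.zeros(3), 0.0
alpha, lam = 0.1, 5.0
m = X.shape[0]
old_J, new_J = [], []

for _ in range(100):
    data_cost, total_cost = costs(w, b, X, y, lam)
    old_J.append(data_cost)
    new_J.append(total_cost)
    y_hat = sigmoid(X @ w + b)
    dw = X.T @ (y_hat - y) / m + (lam / m) * w  # backprop term + regularization
    db = np.mean(y_hat - y)                     # b is not regularized
    w -= alpha * dw
    b -= alpha * db

# new_J is the curve to plot when debugging gradient descent
print("first and last regularized J:", new_J[0], new_J[-1])
```

Gradient descent here is minimizing `new_J`, so that is the quantity whose curve tells you whether the updates are working. `old_J` is kept only for comparison.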

About Me

Hi everyone I am Rishit Dagli

LinkedIn — linkedin.com/in/rishit-dagli-440113165/

Website — rishit.tech

If you want to ask me some questions, report any mistakes, suggest improvements, or give feedback you are free to do so by emailing me at —

hello@rishit.tech