source: oreilly.com

One of the problems of training neural networks, especially very deep neural networks, is vanishing or even exploding gradients. This means that when you train a very deep network, your derivatives (slopes) can sometimes become either very big or very small, maybe even exponentially small, which makes training a lot more difficult and can make it take much longer to converge. Here we will see what the problem of exploding or vanishing gradients really means, and how careful choices of the random weight initialization can significantly reduce it.

Defining the problem

Suppose you are training a very deep neural network. To keep the picture simple, imagine it has only a couple of hidden units per layer and a few hidden layers, though a real network could have many more of both.

Following the usual conventions, we will call the weights of layer 1 w[1], the weights of layer 2 w[2], and so on up to the weights of the last layer L, w[L]. To keep things simple at this stage, let us say we are using a linear activation function, g(z) = z. For now, let us also ignore the biases and set b[l] = 0 for every layer l from 1 to L.

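In this case, we can simply write

$$\hat{y} = w^{[L]} w^{[L-1]} \cdots w^{[2]} w^{[1]} X$$

To see why, let us make some observations. As b = 0 and g(z) = z,

$$z^{[1]} = w^{[1]} X, \qquad a^{[1]} = g(z^{[1]}) = z^{[1]}$$

Next, let us write an expression for a[2]:

$$a^{[2]} = g(z^{[2]}) = z^{[2]} = w^{[2]} a^{[1]}$$

Combining this with the earlier equation gives a[2] = w[2] w[1] X; continuing, a[3] = w[3] w[2] w[1] X, and so on, with each new layer's weight matrix multiplying from the left.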
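To check this numerically, here is a minimal NumPy sketch of a deep linear network with b[l] = 0 and g(z) = z. NumPy, the layer sizes (2 inputs, 2 hidden units per layer, 1 output), and the helper name `forward_linear` are our own illustrative assumptions, not part of the original article. It confirms that running the layers one at a time gives the same ŷ as multiplying all the weight matrices together first.

```python
import numpy as np

def forward_linear(weights, x):
    """Forward pass with g(z) = z and b[l] = 0: a[l] = w[l] @ a[l-1]."""
    a = x
    for w in weights:              # weights[0] is w[1], weights[-1] is w[L]
        a = w @ a
    return a

rng = np.random.default_rng(0)
L = 5
# Hypothetical sizes: 2 input features, 2 hidden units per layer, 1 output
weights = [rng.standard_normal((2, 2)) for _ in range(L - 1)]
weights.append(rng.standard_normal((1, 2)))    # w[L] maps 2 units to 1 output
x = rng.standard_normal((2, 1))

# Build w[L] w[L-1] ... w[1]: each new matrix multiplies from the left
product = np.eye(2)
for w in weights:
    product = w @ product

y_hat_layerwise = forward_linear(weights, x)
y_hat_product = product @ x
print(np.allclose(y_hat_layerwise, y_hat_product))  # True
```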

Exploding Gradients

Now let us say that every w[l] is just a bit bigger than the identity. Since the w[l] are matrices, more precisely each is a little bigger than the identity matrix, except w[L], which has different dimensions from the rest. Call this matrix C = 1.2 · I, where I is the 2 × 2 identity matrix. The expression above then simplifies to

$$\hat{y} = w^{[L]} \begin{bmatrix} 1.2 & 0 \\ 0 & 1.2 \end{bmatrix}^{L-1} X = w^{[L]} \, (1.2)^{L-1} X$$

If L is large, as it is for a very deep neural network, ŷ will be very large. In fact, it grows exponentially, like 1.2 raised to the number of layers. So in a very deep neural network, the value of ŷ will simply explode.
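A minimal NumPy sketch of this case, assuming every hidden weight matrix is exactly C = 1.2 · I with two units per layer. The input x = (1, 1), the output layer w[L] = [1, 1], and the function name `deep_linear_y_hat` are hypothetical choices for illustration. With these choices ŷ works out to exactly 2 · 1.2^(L-1).

```python
import numpy as np

def deep_linear_y_hat(scale, L):
    """y_hat = w[L] C^(L-1) x with C = scale * I (2 x 2), b = 0, g(z) = z."""
    C = scale * np.eye(2)
    w_L = np.ones((1, 2))        # hypothetical output layer w[L]
    x = np.ones((2, 1))          # hypothetical input
    a = x
    for _ in range(L - 1):       # layers 1 .. L-1 all use C
        a = C @ a
    return (w_L @ a).item()

for L in (10, 50, 100):
    # The two numbers agree up to rounding: y_hat grows like 1.2^(L-1)
    print(L, deep_linear_y_hat(1.2, L), 2 * 1.2 ** (L - 1))
```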

Vanishing Gradients

Conversely, if we replace 1.2 with 0.5, something less than 1, then C = 0.5 · I and the expression contains 0.5^(L-1) instead. Now the activation values decrease exponentially as a function of the number of layers L, so in a very deep network the activations end up vanishing.
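For a sense of scale, take a network with L = 100 layers (an illustrative choice) and compare the two factors:

$$1.2^{L-1} = 1.2^{99} \approx 6.9 \times 10^{7}, \qquad 0.5^{L-1} = 0.5^{99} \approx 1.6 \times 10^{-30}$$

A per-layer factor only 20% above or 50% below 1 is enough to push the output roughly 37 orders of magnitude apart between the two cases.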

The intuition I hope you take away is this: if the weights w are all just a little bigger than one, or just a little bigger than the identity matrix, then in a very deep network the activations can explode. And if w is just a little less than the identity and the network is very deep, the activations will decrease exponentially. Although I went through this argument in terms of activations, a similar argument shows that the derivatives, or gradients, computed during backpropagation will also increase or decrease exponentially as a function of the number of layers.
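The claim about gradients can be checked on the same toy linear network used in the exploding example: C = scale · I in layers 1 to L-1, plus a hypothetical output layer w[L] = [1, 1] and input x = (1, 1). This NumPy sketch (the function name `grad_first_layer` is ours) backpropagates from ŷ to w[1]. For this network, ∂ŷ/∂w[1] = (w[L] C^(L-2))ᵀ xᵀ, so every entry equals scale^(L-2).

```python
import numpy as np

def grad_first_layer(scale, L):
    """d y_hat / d w[1] for the deep linear net, computed by backpropagation."""
    C = scale * np.eye(2)
    weights = [C] * (L - 1) + [np.ones((1, 2))]   # w[1..L-1] = C, w[L] hypothetical
    x = np.ones((2, 1))
    grad = np.ones((1, 1))                        # d y_hat / d y_hat
    for w in reversed(weights[1:]):               # walk back from w[L] to w[2]
        grad = w.T @ grad                         # d y_hat / d a[l-1] = w[l]^T (d y_hat / d a[l])
    return grad @ x.T                             # d y_hat / d w[1] = (d y_hat / d z[1]) x^T

for scale in (1.2, 0.5):
    g = grad_first_layer(scale, 100)
    print(scale, g[0, 0], scale ** 98)            # each entry equals scale^(L-2)
```

With 100 layers the gradient for the first layer is about 5.7 × 10^7 in one case and about 3 × 10^-30 in the other, which is exactly the exploding or vanishing behavior described above.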

Why care?

Today many tasks call for a very deep neural network, with maybe 152 or 256 layers, and in that case these values can get really big or really small. This drastically affects training and makes it a lot more difficult. Consider this scenario: if your gradients are exponentially small in L, gradient descent will take tiny steps and take a long time to learn anything or converge. You have now seen how deep networks suffer from the problems of vanishing or exploding gradients; next, let us see how a careful initialization of the weights can help solve this problem.

Initialization of weights

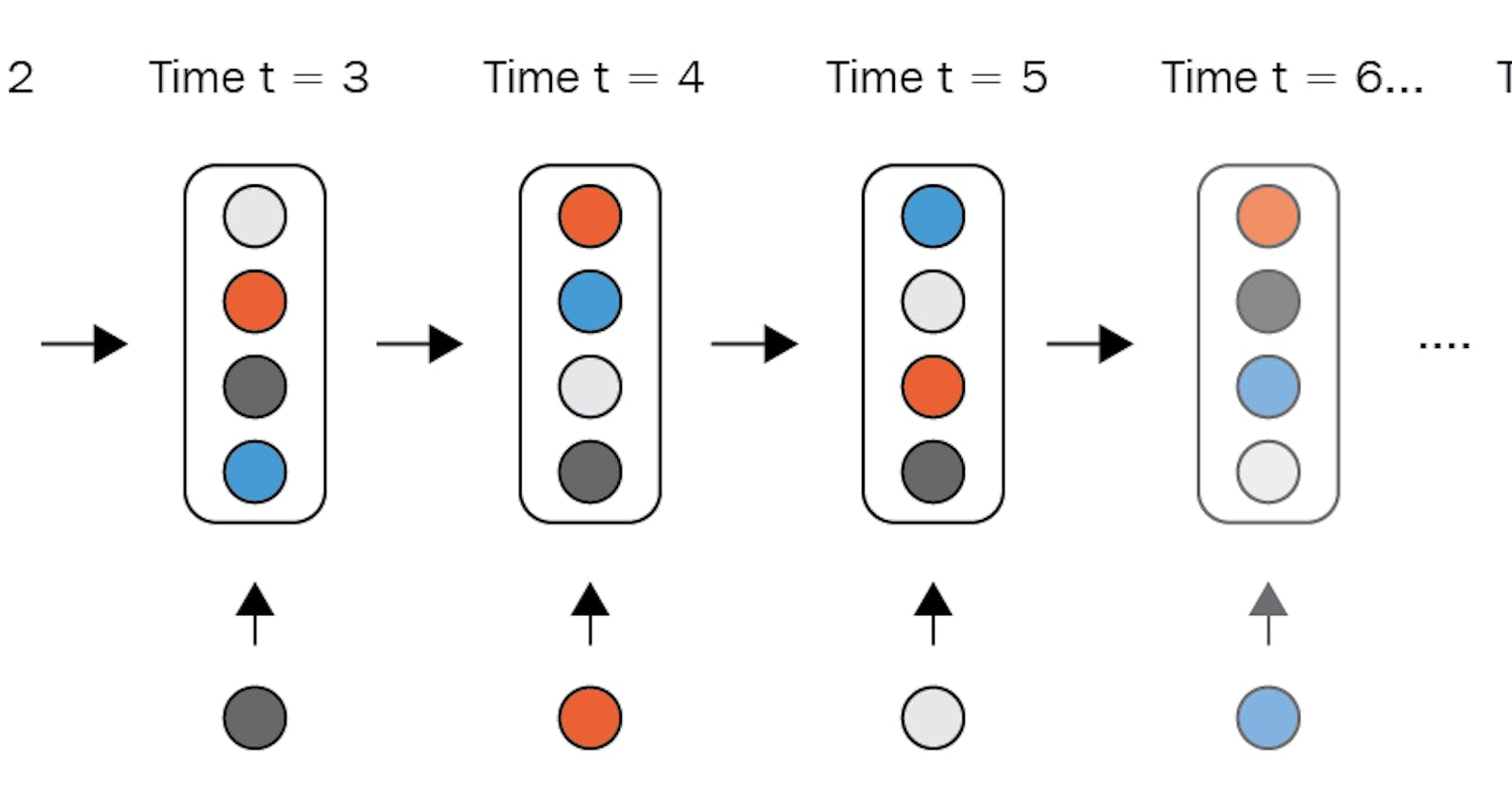

It turns out that a solution that helps a lot is a more careful choice of the random initialization for your neural network. Let us first understand this with the help of a single neuron.

You have inputs x1 to x4, and the neuron computes a = g(z), which gives the output ŷ. This is pretty much the case for any neuron. Later, when we generalize, the x's will be replaced by the activations a[l-1] of the previous layer, but for now we will stick with x. As before, we ignore b by setting b = 0, so

$$z = w_1 x_1 + w_2 x_2 + \cdots + w_n x_n$$

To keep z from blowing up or becoming too small, notice that the larger n is, the smaller you want each w_i to be, because z is the sum of the n terms w_i x_i, where n is the number of input features going into the neuron (4 in this example). When you add up a lot of these terms, you want each term to be smaller. A reasonable thing to do is to set the variance of w_i, written var(w_i), to the reciprocal of n, that is, var(w_i) = 1/n. In practice, for a layer l this means:

$$w^{[l]} = \text{randn}(S) \cdot \sqrt{\frac{1}{n^{[l-1]}}}$$

where randn(S) draws standard Gaussian values.

Please note the S here refers to the shape of the matrix.
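To see the var(w_i) = 1/n rule at work, here is a small Monte Carlo sketch in NumPy. It assumes, as the argument implicitly does, that the inputs x_i are independent with mean 0 and variance 1 and are independent of the weights; n = 200 and the number of trials are arbitrary choices. Under those assumptions var(z) = n · var(w_i) · var(x_i), so unscaled weights give var(z) ≈ n while the scaled ones give var(z) ≈ 1.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200                      # number of input features (illustrative)
trials = 10_000              # independent draws of (w, x)

# Assumption: inputs have mean 0 and variance 1
x = rng.standard_normal((trials, n))

w_plain = rng.standard_normal((trials, n))                   # var(w_i) = 1
w_scaled = rng.standard_normal((trials, n)) * np.sqrt(1 / n)  # var(w_i) = 1/n

z_plain = np.sum(w_plain * x, axis=1)    # z = w_1 x_1 + ... + w_n x_n
z_scaled = np.sum(w_scaled * x, axis=1)

print(np.var(z_plain))    # close to n = 200
print(np.var(z_scaled))   # close to 1
```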

Here n[l-1] is the number of inputs each neuron in layer l receives. If you are using the most common activation function, ReLU, it turns out that setting the variance to 2/n works better than 1/n. To summarize:

$$w^{[l]} = \text{randn}(S) \cdot \sqrt{\frac{1}{n^{[l-1]}}} \tag{1}$$

$$w^{[l]} = \text{randn}(S) \cdot \sqrt{\frac{2}{n^{[l-1]}}} \quad \text{(ReLU)} \tag{2}$$
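The 1/n[l-1] and 2/n[l-1] rules can be collected into a single initializer. Below is a minimal NumPy sketch; the function name `initialize_parameters`, the `layer_dims` list (n[0] input features, then n[1], ..., n[L]), and the dictionary keys "w1", "b1", ... are our own illustrative choices. Biases start at zero, which does not affect the variance argument.

```python
import numpy as np

def initialize_parameters(layer_dims, activation="relu", seed=0):
    """Random init with var(w[l]) = 2/n[l-1] for ReLU, 1/n[l-1] otherwise."""
    rng = np.random.default_rng(seed)
    numerator = 2.0 if activation == "relu" else 1.0
    params = {}
    for l in range(1, len(layer_dims)):
        n_prev, n_curr = layer_dims[l - 1], layer_dims[l]
        # Shape S of w[l] is (n[l], n[l-1]); scale a standard normal draw
        params[f"w{l}"] = rng.standard_normal((n_curr, n_prev)) * np.sqrt(numerator / n_prev)
        params[f"b{l}"] = np.zeros((n_curr, 1))
    return params

params = initialize_parameters([784, 512, 256, 10], activation="relu")
print(params["w1"].shape, params["w1"].std())   # std close to sqrt(2/784), about 0.0505
```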

If you are familiar with random variables, you can now see how all of this comes together: taking a standard Gaussian random variable and multiplying it by the square root of 2/n[l-1] gives a variance of 2/n[l-1]. Notice also that we moved from n to n[l-1] to generalize the rule to any layer l, since each unit in layer l has n[l-1] input features.
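The step being used is that scaling a random variable by a constant c scales its variance by c², that is, Var(cZ) = c² Var(Z). For a standard Gaussian Z:

$$\operatorname{Var}\!\left(\sqrt{\frac{2}{n^{[l-1]}}}\; Z\right) = \frac{2}{n^{[l-1]}} \operatorname{Var}(Z) = \frac{2}{n^{[l-1]}}, \qquad Z \sim \mathcal{N}(0, 1)$$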

With this scaling, z stays on a similar scale from one layer to the next, so no w[l] is much bigger or much smaller than the identity in its effect. This definitely helps keep your gradients from vanishing or exploding too quickly.
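A quick experiment makes this concrete. The NumPy sketch below pushes a random batch through 50 ReLU layers (b = 0) and reports the spread of the final activations for three weight scales: a fixed 1.0, a fixed 0.01, and the sqrt(2/n[l-1]) rule. The depth, width, batch size, and the function name `final_activation_std` are illustrative assumptions.

```python
import numpy as np

def final_activation_std(weight_std, depth=50, width=128, batch=200, seed=0):
    """Push a random batch through `depth` ReLU layers (b = 0) and return the
    standard deviation of the last layer's activations."""
    rng = np.random.default_rng(seed)
    a = rng.standard_normal((width, batch))       # hypothetical unit-variance inputs
    for _ in range(depth):
        n_prev = a.shape[0]
        w = rng.standard_normal((width, n_prev)) * weight_std(n_prev)
        a = np.maximum(0.0, w @ a)                 # ReLU
    return a.std()

print(final_activation_std(lambda n: 1.0))              # huge: activations explode
print(final_activation_std(lambda n: 0.01))             # tiny: activations vanish
print(final_activation_std(lambda n: np.sqrt(2 / n)))   # stays on the order of 1
```

With a fixed scale the result drifts by dozens of orders of magnitude, while the 2/n[l-1] rule keeps it near 1 regardless of depth.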

Variants

What we just did assumed a ReLU activation function or a linear activation function. So let us see what changes if you are using some other activation function.

- tanh

There is a well-known paper that shows that when you are using the hyperbolic tangent, or simply tanh, it is a better idea to multiply by the square root of 1/n[l-1] instead of 2/n[l-1]. In other words, you would use:

$$w^{[l]} = \text{randn}(S) \cdot \sqrt{\frac{1}{n^{[l-1]}}}$$

However, we will not prove this result here. It is more commonly referred to as Xavier initialization or Glorot initialization, after Xavier Glorot, the first author of the paper.

There is also a variant of this, which again has a theoretical justification and appears in a few papers:

$$w^{[l]} = \text{randn}(S) \cdot \sqrt{\frac{2}{n^{[l-1]} + n^{[l]}}}$$

However, I would recommend using Formula (2) when you are using ReLU, which is the most common activation function; if you are using tanh, you could try both of these out.
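The choices in this section differ only in the factor that multiplies the standard Gaussian draw. Here is a minimal NumPy sketch collecting them in one place; the function name `init_std` and the label "tanh_variant" for the 2/(n[l-1] + n[l]) rule are hypothetical names of our own.

```python
import numpy as np

def init_std(activation, n_prev, n_curr=None):
    """Factor to multiply a standard normal draw by, per the rules in this section."""
    if activation == "relu":
        return np.sqrt(2 / n_prev)                 # var = 2 / n[l-1]
    if activation == "tanh":
        return np.sqrt(1 / n_prev)                 # Xavier: var = 1 / n[l-1]
    if activation == "tanh_variant":
        return np.sqrt(2 / (n_prev + n_curr))      # var = 2 / (n[l-1] + n[l])
    raise ValueError(f"unknown activation: {activation}")

rng = np.random.default_rng(0)
n_prev, n_curr = 300, 100                          # illustrative layer sizes
w = rng.standard_normal((n_curr, n_prev)) * init_std("tanh_variant", n_prev, n_curr)

print(init_std("relu", n_prev), init_std("tanh", n_prev), init_std("tanh_variant", n_prev, n_curr))
print(w.std())                                     # close to sqrt(2 / 400)
```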

Making it better

There is one more idea that might make your initialization even better. Take Formula (2) and add a parameter γ to it (not to be confused with the SVM hyperparameter γ), multiplying the original formula by it:

$$w^{[l]} = \gamma \cdot \text{randn}(S) \cdot \sqrt{\frac{2}{n^{[l-1]}}}$$

You could then make γ another hyperparameter to tune, but beware that γ usually does not change results drastically, so it is not a hyperparameter you would want to tune first. It may, however, give you minor improvements.
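A minimal NumPy sketch of the γ-scaled ReLU rule; the function name `scaled_he_init` and the candidate γ values are illustrative assumptions. Because γ only rescales the draw, the standard deviation of the weights becomes γ · sqrt(2/n[l-1]), and γ = 1 gives back Formula (2).

```python
import numpy as np

def scaled_he_init(n_curr, n_prev, gamma=1.0, seed=0):
    """ReLU-style init multiplied by gamma (gamma = 1 recovers the 2/n[l-1] rule)."""
    rng = np.random.default_rng(seed)
    return gamma * rng.standard_normal((n_curr, n_prev)) * np.sqrt(2 / n_prev)

# Candidate gamma values for a hyperparameter search (illustrative only)
for gamma in (0.5, 1.0, 2.0):
    w = scaled_he_init(128, 256, gamma=gamma)
    print(gamma, w.std())   # close to gamma * sqrt(2/256), about gamma * 0.0884
```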

Implementing with TensorFlow

You can easily implement these initializers with deep learning frameworks; here I will show how to do this with TensorFlow. We will use TensorFlow 2.x for the examples.

- Glorot normal or Xavier normal

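```python
import tensorflow as tf

# Returns an initializer object; pass an integer seed for reproducible draws
initializer = tf.keras.initializers.glorot_normal(seed=None)
```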

It draws samples from a truncated normal distribution centered on 0 with stddev = sqrt(2 / (fan_in + fan_out)) where fan_in is the number of input units in the weight tensor and fan_out is the number of output units in the weight tensor.

- Glorot uniform or Xavier uniform

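```python
import tensorflow as tf

# Returns an initializer object; pass an integer seed for reproducible draws
initializer = tf.keras.initializers.glorot_uniform(seed=None)
```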

It draws samples from a uniform distribution within [-limit, limit], where limit = sqrt(6 / (fan_in + fan_out)), fan_in is the number of input units in the weight tensor, and fan_out is the number of output units in the weight tensor.
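Why sqrt(6 / (fan_in + fan_out)) for the uniform version? A uniform distribution on [-limit, limit] has variance limit²/3, so this limit gives the same variance, 2 / (fan_in + fan_out), as the normal version. The NumPy check below is a re-derivation for illustration, not TensorFlow's own implementation; the layer sizes are arbitrary.

```python
import numpy as np

fan_in, fan_out = 400, 200                     # illustrative layer sizes
limit = np.sqrt(6 / (fan_in + fan_out))

rng = np.random.default_rng(0)
w = rng.uniform(-limit, limit, size=(fan_in, fan_out))

target_var = 2 / (fan_in + fan_out)            # variance targeted by Glorot normal
print(limit ** 2 / 3, target_var)              # equal up to rounding
print(w.var())                                 # empirical variance, close to target_var
```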

Both snippets return an initializer object, which you can pass to a layer, for example through the kernel_initializer argument of tf.keras.layers.Dense. As you can see, TensorFlow makes this very easy.

Concluding

I hope this gave you some intuition about the problem of vanishing or exploding gradients, as well as about choosing a reasonable scaling for how you initialize the weights. This keeps your weights from exploding too quickly or decaying to zero too quickly, so you can train a reasonably deep network without the weights or the gradients exploding or vanishing too much. When you train deep networks, this is another trick that will help your neural networks train much more quickly.

About Me

Hi everyone, I am Rishit Dagli.

LinkedIn — linkedin.com/in/rishit-dagli-440113165/

Website — rishit.tech

If you want to ask me questions, report a mistake, suggest improvements, or give feedback, you are free to do so by mailing me at:

hello@rishit.tech